Science fiction writers have always speculated about the future -- sometimes they are quite accurate (Jules Verne predicted live newscasts, space travel, and the Internet in the 19th century) and other times not so much (flying cars haven't taken off yet). Authors typically set their science fiction stories well into the future, so they can get in a few good years of sales before the future becomes the present and their works begin to look naïve and obsolete. Far-future settings also allow an author to solicit a greater suspension of disbelief from the reader -- sure, warp drives and holodecks sound fanciful, but who's to say what wonders may be common in 400 years? However, occasionally authors will accept the difficult challenge of setting their stories in a near-future world similar to our own, where predictions about technological advancement must be grounded in reality and "science as magic" hand waving is not tolerated. It is these stories, when written by authors with expertise in the subject matter and the willingness to conduct research, that provide invaluable inspiration to those of us in the business of turning imagination into reality.

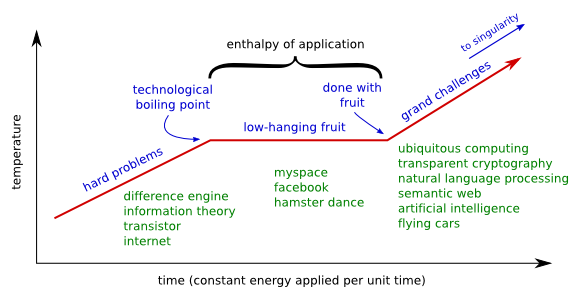

Vernor Vinge's Rainbows End and Charles Stross's Halting State are two recent books that do an excellent job of illuminating possible future paths of software technology. Both books are written by authors who are experienced in the art of software (Vinge is a computer scientist, and Stross is a recovering programmer), and both books express a very specific vision of the next steps in software based on current technology and well-understood trends. These books describe a future where ubiquitous computing has become a reality. Computers have mostly disappeared into the woodwork, keyboards and mice have given way to gestures, and users live in an information-rich augmented reality world where electronic eyewear overlays data onto everyday scenes in useful, and sometimes not so useful, ways.

Rainbows End is set in the year 2025 and is told from the perspective of a recently cured Alzheimer's survivor who must come to terms with the changes in the world around him while unknowingly becoming involved in an international conspiracy. The common operating systems and user interfaces of today, such as Microsoft Windows, are no longer commercially viable (except as legacy interfaces for old people) and have been replaced with a product called Epiphany, which ties the new technologies together. Computer hardware is sewn into the fabric of clothes, and the display device of choice is a pair of electronically enhanced contact lenses. As far as the story goes, I don't think this is Vinge's best work, and I found the bits about the life-extended elderly being "retrained" for new jobs alongside high school students to be somewhat hokey. However, for idea-obsessed readers, the incredible detail given to technological speculation makes up for the weak story, and I can't resist a book whose most clever chapter has one of the best titles ever: "How-to-Survive-the-Next-Thirty-Minutes.pdf". If you want a taste of Vinge's writing with a better storyline, I'd recommend the excellent Marooned in Realtime.

The story of Halting State takes place in the year 2018 and revolves around a software engineer at a company that produces massively multiplayer online games. This character finds himself unwillingly tangled in a complex web of international intrigue that is made possible by the ubiquitous computer technology portrayed in the book. While Halting State describes a ubiquitous computing environment similar to that of Rainbows End, its hardware speculation is less ambitious: instead of wearable computers, mobile phones are the principal unit of personal computing, and eyeglasses provide the augmented reality overlay instead of contact lenses. Halting State is unconventionally written in the second person, as an homage to early computer adventure games. While I know at least one person who found this distracting, the second-person prose became natural and unnoticeable for me and others after the first chapter or two.

I found a couple of the technological predictions presented in these books to be particularly clever. In the world of Rainbows End, transparent cryptography has encouraged the development of sophisticated economic systems. The authenticity provided by digital signatures and certificates not only protects the movement of money but also allows contracts for specific goods and services to be anonymously subcontracted in real time without the involvement of lawyers. The anonymity allows arbitrary individuals to interact with the economy in various agreed-upon roles -- a concept that software engineers refer to as the "separation of interface and implementation." The efficiency makes possible rich systems of multi-level affiliations, with each level providing value in well-defined ways. These new systems also invite criminal abuse, a possibility the book explores. In the following excerpt from Vernor Vinge's Rainbows End, Juan Orozco subcontracts a task (one he himself received anonymously from a third party) to Winston Blount, who verifies the authenticity of the payment promise by checking its digital signature, which is certified by a trusted third party, in this case Bank of America:

"Y-you know, Dean, I may be able to help. No wait -- I don't mean by myself. I have an affiliance you might be interested in."

"Oh?"

He seemed to know what affiliance was. Juan explained Big Lizard's deal. "So there could be some real money in this." He showed him the payoff certificates, and wondered how much his recruit would see there.

Blount squinted his eyes, no doubt trying to parse the certificates into a form that Bank of America could validate. After a moment he nodded, without granting Juan numerical enlightenment. "But money isn't everything, especially in my situation."

"Well, um, I bet whoever's behind these certs would have a lot of angles. Maybe you could get a conversion to help-in-kind. I mean, to something you need."
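Vinge doesn't spell out a protocol, but the check Blount performs maps onto a familiar two-step certificate verification. Here's a minimal sketch of my own, assuming a toy "textbook" RSA signature scheme with hard-coded Mersenne primes and no padding, which is insecure and meant for illustration only. The names `accept_payment` and `encode_key` and the wording of the payment promise are hypothetical. The bank signs a certificate binding the anonymous payer's public key; the recipient checks that certificate against the bank's key, then checks the payer's signature on the promise. The payer's name never appears: the bank vouches for a key, not a person, which is what makes anonymous subcontracting possible. It needs Python 3.8+ for `pow(e, -1, phi)`.

```python
import hashlib
from dataclasses import dataclass

# Toy "textbook" RSA signatures: hard-coded primes, no padding.
# Illustration only; NOT secure.

@dataclass(frozen=True)
class PublicKey:
    n: int
    e: int

@dataclass(frozen=True)
class KeyPair:
    public: PublicKey
    d: int  # private exponent

def make_keypair(p: int, q: int, e: int = 65537) -> KeyPair:
    phi = (p - 1) * (q - 1)
    return KeyPair(PublicKey(p * q, e), pow(e, -1, phi))  # modular inverse

def digest(message: bytes, n: int) -> int:
    # Hash the message, then reduce it into the range of the modulus.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(key: KeyPair, message: bytes) -> int:
    return pow(digest(message, key.public.n), key.d, key.public.n)

def verify(pub: PublicKey, message: bytes, signature: int) -> bool:
    return pow(signature, pub.e, pub.n) == digest(message, pub.n)

def encode_key(pub: PublicKey) -> bytes:
    # Hypothetical certificate body: the bank vouches for this public key.
    return f"key:{pub.n}:{pub.e}".encode()

def accept_payment(bank: PublicKey, payer: PublicKey, cert_sig: int,
                   promise: bytes, promise_sig: int) -> bool:
    # 1. Is the payer's key certified by the trusted third party?
    # 2. Did the holder of that certified key sign this exact promise?
    return (verify(bank, encode_key(payer), cert_sig)
            and verify(payer, promise, promise_sig))

# Known Mersenne primes (2**k - 1), so the demo needs no prime generation.
bank_key = make_keypair(2**61 - 1, 2**89 - 1)
payer_key = make_keypair(2**107 - 1, 2**127 - 1)  # the anonymous contractor

cert_sig = sign(bank_key, encode_key(payer_key.public))
promise = b"pay 500 credits to whoever completes task 17"
promise_sig = sign(payer_key, promise)

ok = accept_payment(bank_key.public, payer_key.public, cert_sig,
                    promise, promise_sig)
tampered = accept_payment(bank_key.public, payer_key.public, cert_sig,
                          b"pay 5000 credits to whoever completes task 17",
                          promise_sig)
print(ok, tampered)
```

Altering even one character of the promise breaks the payer's signature, and a certificate the payer signs for itself fails the bank check. A real system would use a vetted library with proper padding and standard certificate formats such as X.509 rather than hand-rolled RSA.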

In Halting State, the intersection of refined peer-to-peer networking protocols, massively parallel execution contexts, and the increasing computational power of hand-held devices has led to the development of the Zone, an advanced distributed computing environment that relies on processing power provided by its users' mobile phones. The phone-based Zone nodes are implemented as virtual machine sandboxes which execute distributed code written in the "Python 3000" language. In the following excerpt from Charles Stross's Halting State, the protagonist explains how the Zone uses cryptography to protect assets in online games:

“Zone games don't run on a central server, they run on distributed-processing nodes using a shared network file system. To stop people meddling with the contents, everything is locked using a cryptographic authorization system. If you ‘own’ an item in the game, what it really means is that nobody else can update its location or ownership attributes without you using your digital signature to approve the transaction -- and a co-signature signed by a cluster of servers all spying on each other to make sure they haven't been suborned.”
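The Zone is fiction, and Stross doesn't specify its protocol, but the ownership rule in that quote is concrete enough to sketch. What follows is a hypothetical illustration under my own assumptions: the same toy textbook RSA signatures (insecure, illustration only), a transfer message format I made up, and a fixed quorum of 2 out of 3 server co-signatures standing in for the "cluster of servers all spying on each other." The names `apply_transfer`, `transfer_message`, and `quorum` are invented for this example. An item changes hands only if its current owner signed the transfer and enough distinct servers co-signed the same bytes. It needs Python 3.9+.

```python
import hashlib
from dataclasses import dataclass

# Toy textbook RSA (hard-coded primes, no padding): illustration only, NOT secure.

@dataclass(frozen=True)
class PublicKey:
    n: int
    e: int

@dataclass(frozen=True)
class KeyPair:
    public: PublicKey
    d: int

def make_keypair(p: int, q: int, e: int = 65537) -> KeyPair:
    return KeyPair(PublicKey(p * q, e), pow(e, -1, (p - 1) * (q - 1)))

def digest(message: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(key: KeyPair, message: bytes) -> int:
    return pow(digest(message, key.public.n), key.d, key.public.n)

def verify(pub: PublicKey, message: bytes, signature: int) -> bool:
    return pow(signature, pub.e, pub.n) == digest(message, pub.n)

@dataclass(frozen=True)
class Item:
    item_id: str
    owner: PublicKey

def transfer_message(item_id: str, new_owner: PublicKey) -> bytes:
    # Hypothetical format: the exact bytes the owner and servers must all sign.
    return f"transfer:{item_id}:{new_owner.n}:{new_owner.e}".encode()

def apply_transfer(item: Item, new_owner: PublicKey, owner_sig: int,
                   cosigs: dict[int, int], servers: list[PublicKey],
                   quorum: int) -> Item:
    msg = transfer_message(item.item_id, new_owner)
    if not verify(item.owner, msg, owner_sig):
        raise PermissionError("current owner did not approve this transfer")
    # Count distinct servers (keyed by index) whose co-signature checks out.
    good = sum(1 for i, sig in cosigs.items()
               if 0 <= i < len(servers) and verify(servers[i], msg, sig))
    if good < quorum:
        raise PermissionError(f"{good} valid co-signatures, {quorum} required")
    return Item(item.item_id, new_owner)

# Five keypairs built from known Mersenne primes (2**k - 1).
EXPONENTS = [61, 89, 107, 127, 521, 607, 1279, 2203, 2281, 3217]
alice, bob, *server_keys = [make_keypair(2**a - 1, 2**b - 1)
                            for a, b in zip(EXPONENTS[::2], EXPONENTS[1::2])]
servers = [k.public for k in server_keys]

sword = Item("sword-42", alice.public)
msg = transfer_message(sword.item_id, bob.public)
cosigs = {0: sign(server_keys[0], msg), 2: sign(server_keys[2], msg)}
sword = apply_transfer(sword, bob.public, sign(alice, msg), cosigs,
                       servers, quorum=2)
print(sword.owner == bob.public)
```

Keying co-signatures by server index keeps one server from counting twice toward the quorum. A real system would also have to stop old, validly signed transfers from being replayed, for example by including a nonce or sequence number in the signed message.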

Both Vinge's and Stross's visions of software's future rely on cryptographic systems which are more ubiquitous and transparent than most of the systems in use today. While secure web transactions have been quite successful and have largely met the goal of transparently verifying the authenticity of the web server, use of certificates by individuals to prove their authenticity to others (whether via the web, email, IM, or other protocols) is extraordinarily rare except among extreme power users and certain high-security corporate environments. Bringing the full power of cryptography to ordinary people, and making it so easy that they don't realize they are using it, is a necessary prerequisite to building the next-generation Internet. Perhaps we need a revival of the cypherpunk movement of the 1990s -- a sort of Cypherpunk 2.0, if you will -- only this time with an emphasis on user interface simplicity that will finally allow cryptographic technology to be accepted by the public and commonly used to provide privacy and authenticity.

The role of writers in injecting a needed dose of imagination and creativity into engineers and entrepreneurs should be taken very seriously. I'm reminded of the story about how the science fiction writer Katherine MacLean, who predicted the role of computers in communication and music in her 1950 novelette Incommunicado, accidentally stumbled into a conference of electrical engineers and found herself quickly surrounded by Bell Telephone researchers who were inspired by her ideas to build the next generation of communications equipment. I hope that the software engineers of today find similar inspiration in the works of visionaries such as Vinge and Stross, and decide that it's time to roll up their sleeves and get to work building the world of tomorrow.

Note: It is my opinion that the use of the above book cover images and excerpts is acceptable under the fair use doctrine in the United States. If you are the author, publisher, or otherwise hold rights to the above materials and believe me to be in error, please contact me with details.

posted at 2008-11-16 01:23:06 US/Mountain

by David Simmons

tags: cypherpunk2.0 sf whatsnext inspiration
