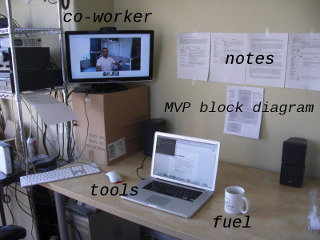

As a work-from-home software engineer, I'm always looking for ways to improve communication with co-workers and clients to help bridge the distance gap. At the beginning of October, a colleague and I decided to devote the month to an extreme collaboration experiment we called Maker's Month. We had been using Google Hangouts for meetings with great effectiveness, so we asked ourselves: Why not leave a hangout running all day, to provide the illusion of working in the same room? To that end, we decided to take our two offices -- separated spatially by 1,000 miles -- and merge them into one with the miracle of modern telecommunications.

We began by establishing some work parameters: We would have a meeting every morning to discuss the goals of the day, then mute our microphones for most of the next 6 to 7 "core office hours" while the hangout was left running. During the day we could see each other working, ask questions, engage in impromptu integration sessions, and generally pretend like we were working under the same roof. At the end of the day, we would have another meeting to discuss our accomplishments, adjust the project schedule, and set goals for the following day. We would then adjourn the hangout and work independently in "offline" mode.

There were a handful of questions we were hoping to answer during the course of this experiment:

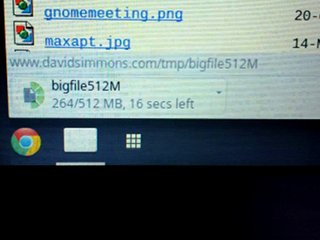

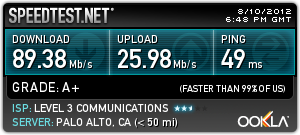

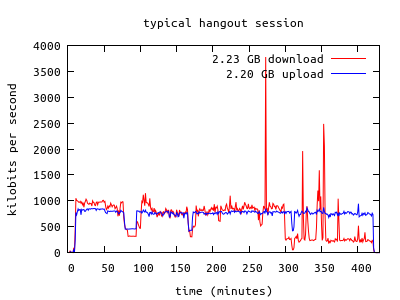

- How much bandwidth would this telepresence cost, in terms of both instantaneous bitrate and total data usage?

- What audio/video gear would give us the best experience, and help avoid the usual trouble areas? (Ad-hoc conferencing setups are notorious for annoying glitches such as remote echo.)

- Would Google even allow us to keep such long-duration hangouts running, or to use such a large number of hangout-hours in a month? (Unlike peer-to-peer protocols such as RTP/WebRTC/etc., hangout media streams are actually switched in the cloud and consume the CPU/bandwidth resources of Google.)

- Do extended telepresence sessions provide real value to software development teams?

While Google Hangouts supports up to nine people in a hangout, our experiment only involved two people. (Our initial plans to bring a third team member into the hangout never materialized.)

Technical details

This wouldn't be a proper Caffeinated Bitstream post without some graphs and figures, so here are some charts showing the overall bandwidth usage:

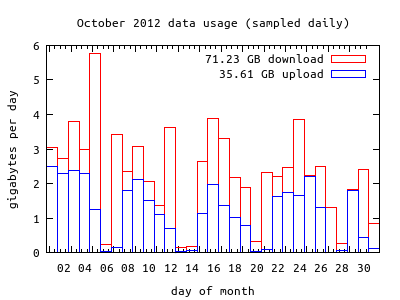

The first chart shows the bandwidth usage of a typical two-person hangout session, which uses about 750-1000 kbps in each direction (when the connection settings are configured for "fast connection"). The aberrations in the chart are due to changing hangout parameters (i.e. screen sharing instead of video, or the remote party dropping off.) The second chart shows the bandwidth usage for my house during the month of October. The hangout sessions are likely the bulk of this usage, but it also includes occasional movie streaming, Ubuntu downloads, software updates, and such. I sometimes hear people comment that the bandwidth caps imposed by some internet service providers can't be exceeded by legitimate use of the network, but I can easily imagine many telepresence scenarios that would quite legitimately push users over the limit. Fortunately, our usage is fairly modest, and my provider doesn't impose caps, anyway.

My hangout hardware consists of:

- A desktop computer with a quad-core Core i7 920 2.67Ghz processor and 8GB of RAM, running Ubuntu Linux

- A dedicated LCD monitor

- A Logitech HD Pro Webcam C910

- A Blue Yeti microphone

- A stereo system with good speakers, for audio output.

I've occasionally run Google Hangouts on my mid-2010 MacBook Pro, but the high CPU usage eventually revs up the fan to an annoying degree. The desktop computer doesn't seem to noticeably increase its fan noise, although I do have it tucked away in a corner. I've found that having a dedicated screen for the hangout really helps the telepresence illusion. The Yeti microphone is awesome, but the C910's built-in microphone is also surprisingly great. In fact, my colleague can't tell much of a difference between the two. I've noticed that the use of some other (perhaps sub-standard) microphones seems to thwart the echo cancellation built-in to Google Hangouts, resulting in echo that makes it almost impossible to carry on a conversation.

In addition to its thirst for bandwidth, Google Hangouts also demands a hefty chunk of processor time (and thus, power usage) on my equipment:

| system | cpu usage | quiescent power | hangout power | hangout power increase |

| 4-core Core i7 920 2.67Ghz desktop | 62% | 75W | 80W | 5W |

| 2-core Core i7 2.66Ghz mid-2010 MacBook Pro | 77% | 13W | 38W | 25W |

(Note: CPU usage is measured such that full usage of a single core is 100%. The usage is the sum of various processes related to delivering the hangout experience. On Linux: GoogleTalkPlugin, pulseaudio, chrome, compiz, Xorg. On Mac: GoogleTalkPlugin, Google Chrome Helper, Google Chrome, WindowServer, VDCAssistant. Power was measured with an inline Kill A Watt meter.)

I figure that using my desktop machine for daily hangouts has a marginal electrical cost of around $0.06/month. (Although keeping this desktop running without suspending it is probably costing me around $4.74/month.) Changing the hangout settings to "slow connection" roughly reduces the CPU usage by half.

Why does Google Hangouts use so much CPU and bandwidth? I think it all comes down to the use of H.264 Scalable Video Coding (SVC), a bitrate peeling scheme where the video encoder actually produces multiple compressed video streams at different bitrates. The higher-bitrate streams are encoded relative to information in the lower-bitrate streams, so the total required bitrate is fortunately much less than the sum of otherwise independent streams, but it is higher than a single stream. The "video switch in the cloud" operated by Google (or perhaps Vidyo, the provider of the underlying video technology) can determine the bandwidth capacity of the other parties and peel away the high-bitrate layers if necessary. Unfortunately, not only does SVC somewhat increase the bandwidth requirements, but it also means that the Google Talk Plugin cannot leverage any standard H.264 hardware encoders that may be present on the user's computer. Thus, a software encoder is used and the CPU usage is high. The design decision to use SVC probably pays off when three people or more are using a hangout.

One downside to using Google Hangouts for extended telepresence sessions is the periodic "Are you still there?" prompt, which seems to appear roughly every 2.5 hours. If you don't answer in the affirmative, you will be dropped from the hangout after a few minutes. Sometimes when I've stepped out of the office for coffee, I'll miss the prompt and get disconnected. I understand why Google does this, though, and reconnecting to the same hangout is pretty easy. Even with our excessive use of Google Hangouts, we haven't encountered any other limits to the service.

Telepresence effectiveness

Video conferencing has always offered some obvious communication advantages, and Google Hangouts is no exception. The experience is much better than talking on the phone, as body language can really help convey meaning. In many ways, it does help close the distance gap and simulate being in the same room: team members can show artifacts (such as devices and mobile phone apps) and see at a glance if other team members are present, absent, working hard on a problem, or perhaps available for interruption. We made heavy use of the screen sharing feature, and even took advantage of the shared YouTube viewing on several occasions. We didn't engage in pair programming in this experiment, although remote pair programming is not unheard of. The biggest benefit of telepresence for geographically distributed teams seems to be keeping team members focused and engaged, as being able to see other team members working can be a source of motivation.

For me, the biggest downside to frequent use of Google Hangouts is the "stream litter" problem: Every hangout event appears in your Google+ stream forever, unless you manually delete it. While it's only visible to the hangout participants, it's really annoying to have to sift through a hundred hangout events while I'm looking for an unrelated post in my Google+ stream. Also, it's sometimes awkward when I want to share the screen from my work computer while using a different computer for the hangout. I end up joining the hangout a second time from my work computer, only to have nasty audio feedback ensue until I mute the microphone and speaker.

Conclusions

I think that using Google Hangouts for extended work sessions adds a lot of value, and I'll continue to use it. It would be interesting to try other video conferencing solutions to see how they compare.

For the impatient people who just scrolled down to "Conclusions" right away, here's the tl;dr:

Pros:- Continuous visual of other team members increases the opportunities for impromptu discussions and helps motivation.

- The "same room" illusion helps close the distance gap associated with telework.

- Good quality audio and video.

- Easily accessible from GMail or Google+.

- Screen sharing.

- Shared YouTube viewing.

- Relatively high (but manageable) bandwidth and CPU requirements.

- Google+ stream littered with hangout events.

- 2.5-hour "Are you still there?" prompt.

- When eating doughnuts in front of team members, can't offer some for everyone.

posted at 2012-11-20 21:51:08 US/Mountain

by David Simmons

tags: hangouts telepresence google video audio

permalink

comments