As the consumer electronics revolution brings more and more of the digital world to handheld devices, the chief constraint developers often face is not bandwidth or CPU cycles, but rather battery life. Since many next-generation applications require creative use of the network, I decided to run a few tests to discover the true battery cost of network use in certain scenarios.

I have an interest in mesh overlay networks, and I'm curious about the cost of mesh maintenance on power-constrained devices. Therefore, these tests explore the use of relatively small network transactions performed at regular intervals. Mobile devices are known to be optimized for aggregated time-adjacent traffic, with the radio wake-up cost leading to a low ROI for small (e.g. one IP packet) transmissions. This could unfortunately be bad news for mesh maintenance, where lots of small transmissions are spread out in time.

Ilya Grigorik's High Performance Browser Networking (O'Reilly Media, 2013) goes into detail about these issues in Chapter 7 "Mobile Networks" and Chapter 8 "Optimizing for Mobile Networks". Some key insights from this work include:

- "The "energy tails" generated by the timer-driven state transitions make periodic transfers a very inefficient network access pattern on mobile networks."

- "Radio use has a nonlinear energy profile with respect to data transferred."

- "Intermittent network access is a performance anti-pattern on mobile networks..."

Test methodology

Earlier this year when I was switching carriers, I found myself with a spare Android handset with LTE service enabled. Seizing the opportunity of having an activated handset that's not saddled with my usual array of chatty apps (mail, Twitter, etc.), I ran a series of tests measuring battery drain in various controlled network conditions.

The handset under test was a Samsung Galaxy Nexus running Android 4.3, equipped with the factory supplied 1850mAh battery. The network connection was provided by Sprint's LTE network. To reduce the amount of unintentional background network traffic, the device was reset to factory defaults and not associated with any Google account.

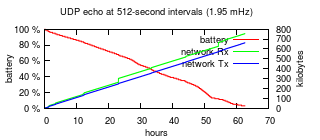

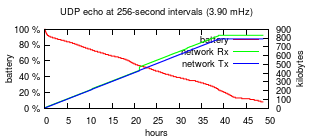

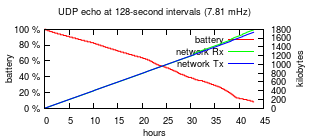

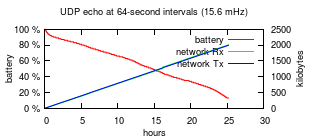

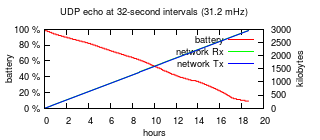

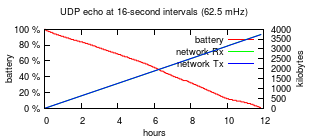

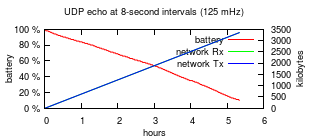

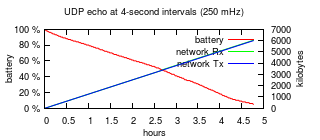

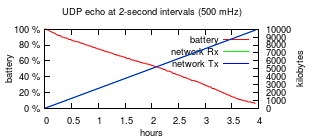

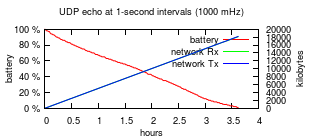

I developed an app to perform network traffic at a specified interval and record the battery level and network counters each minute. This app sends a 1400-byte UDP packet as an echo request to a cloud server, where a small Python script verifies the authenticity of the request and returns a 1400-byte UDP echo response packet. In this fashion, network traffic should be roughly balanced between upload and download, minus any occasional packet loss.

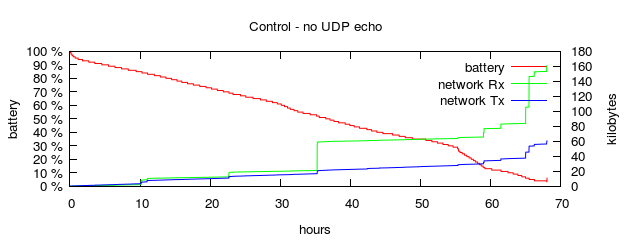

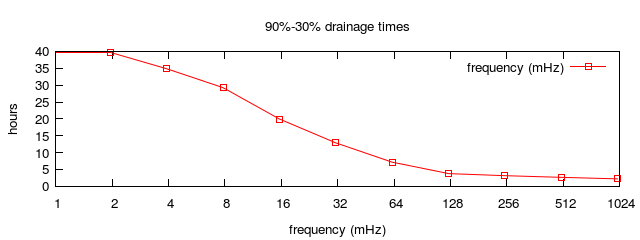

To judge the overall battery usage for an individual test with a specific network transaction frequency, I measured the time elapsed while the battery drained from 90% to 30%. (Battery usage was seen to have some aberrations above 90% and below 30%, so such data was discarded for the purpose of calculating drainage times.)

Caveats

This is not a fully controlled laboratory test or representative of a broad range of devices and networks, but rather a "best-effort only" test using the equipment at hand. Thus, it's important to keep in mind a number of caveats:

- The LTE signal strength is not guaranteed to be constant throughout the test. I tried to minimize the variation by always performing tests with the handset in the same physical location and orientation, but there are many factors out of my control. A lower signal strength requires the radio to transmit with higher power to reach the tower, so this could add noise to the data.

- LTE is something of a black box to me, so any peculiarities of the physical and link layers are not taken into account. For example, are there conditions that may prompt the connection to shift to a different band with different transmit power requirements?

- Other wireless providers may use LTE in different frequencies or configurations which may affect the battery usage in different ways.

- Android's background network traffic could not be 100% silenced, and I did not go to extraordinary lengths to track down every last built-in app that occasionally uses the network. However, this unintentional traffic should be fairly negligible.

- This test only considers one specific mobile device with one operating system. Other models will have radios with different power usage characteristics.

- Wi-Fi use is not tested.

Results

Note that the echo frequency above is in millihertz (mHz), not megahertz (MHz) — 1000mHz is 1 echo request/response per second.

Conclusion

One surprising result was the battery longevity in the control test. While most of us have grown accustomed to charging our mobile devices every day, it turns out that with minimal network activity, they can last quite a long time indeed. In this case, the Galaxy Nexus lasts almost three days while associated with an LTE tower.

As expected, the relationship between periodic network use and battery drainage is non-linear. For example, doubling the transaction frequency — say, from 128-second intervals to 64-second intervals — doesn't halve the 90-30% drain time; it only lowers it by 32%. Additionally, there seems to be a leveling out around 8-second (and shorter) intervals. Perhaps certain radio components never power down with such frequent transmissions.

Overall, the situation looks pretty grim for mobile devices being full, continuous participants in mesh overlay networks. The modest bandwidth needs of such applications are overshadowed by the battery impact of using the network in little sips throughout the day. Perhaps a system where all participants agreed to a synchronized schedule for mesh maintenance activities could mitigate the problem, but the benefits are not clear when combined with real-time mesh events instigated by remote users (say, a Kademlia node lookup).

It might be interesting to evaluate the impact of periodic network use with Wi-Fi, or investigate the techniques used by platform push systems such as Google Cloud Messaging and the Apple Push Notification service.

posted at 2014-10-06 14:53:47 US/Mountain

by David Simmons

tags: mobile mesh network

permalink

comments